Today I’m happy to release a tool for low-level analysis of file systems, which includes digging through file system structures that aren’t normally visible when exploring your disks, looking at metadata of files and folders that isn’t normally accessible, and even browsing file systems that aren’t supported by Windows or your PC.

Download FileSystemAnalyzer

This is actually the software that I use “internally” to test and experiment with new features for DiskDigger, but I thought that it might be useful enough to release this tool on its own. It accesses the storage devices on your PC and reads them at the lowest level, bypassing the file system drivers of the OS.

On the surface, this software is very simple: it allows you to browse the files and folders on any storage device connected to your PC, and supports a number of file systems, including some that aren’t supported by Windows itself. However, the power of this tool comes from what else it shows you in addition to the files and folders:

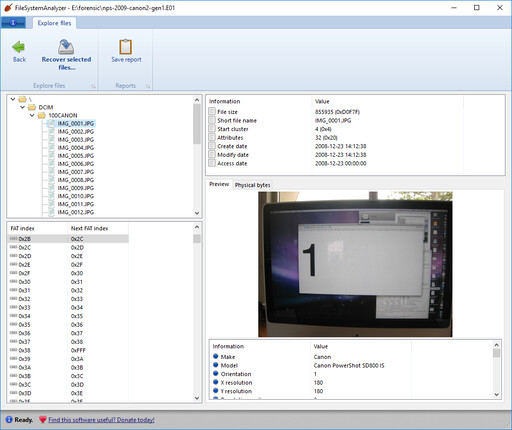

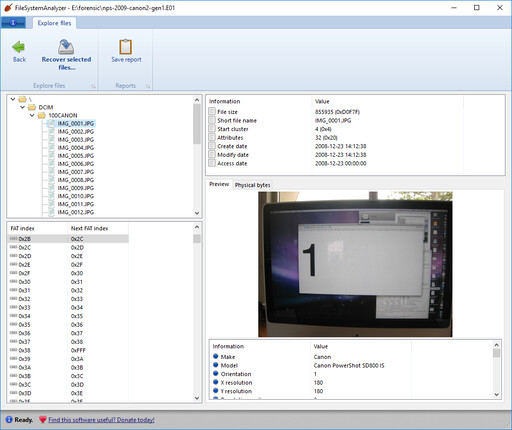
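To give a rough idea of what reading a disk "below" the file system drivers involves, here is a minimal Python sketch that guesses the file system from the first 2 KiB of a volume by checking well-known signatures. This is an illustration only, not FileSystemAnalyzer's actual detection logic; the function name `guess_filesystem` is hypothetical, and the FAT type strings in particular are informational fields that a robust tool would not rely on alone.

```python
import struct

def guess_filesystem(first_bytes: bytes) -> str:
    """Guess a file system from the first 2048 bytes of a volume (illustrative only)."""
    if len(first_bytes) < 2048:
        raise ValueError("need at least the first 2048 bytes of the volume")

    oem = first_bytes[3:11]                      # OEM ID field of the boot sector
    if oem == b"NTFS    ":
        return "NTFS"
    if oem == b"EXFAT   ":
        return "exFAT"
    if first_bytes[3:7] == b"ReFS":
        return "ReFS"
    if first_bytes[82:90] == b"FAT32   ":        # informational type string (FAT32)
        return "FAT32"
    if first_bytes[54:59] in (b"FAT12", b"FAT16"):  # informational type string
        return first_bytes[54:59].decode("ascii")

    sig = first_bytes[1024:1026]                 # HFS/HFS+ volume header signature
    if sig in (b"H+", b"HX"):
        return "HFS+"
    if sig == b"BD":
        return "HFS"

    # The ext2/3/4 superblock starts at byte 1024; its magic is at offset 56.
    (ext_magic,) = struct.unpack_from("<H", first_bytes, 1024 + 56)
    if ext_magic == 0xEF53:
        return "ext2/3/4"
    return "unknown"

# Synthetic example: a blank buffer carrying only the NTFS OEM ID.
sample = bytearray(2048)
sample[3:11] = b"NTFS    "
print(guess_filesystem(bytes(sample)))
```

On a real system the 2 KiB would come from a raw device or a disk image opened in binary mode, which typically requires administrator rights.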

FAT

The program supports FAT12, FAT16, and FAT32 partitions. When looking at FAT partitions, you can see the file and directory structure, along with detailed metadata and previews of the files that you select. When you select a file or folder, you can also see its position in the FAT (file allocation table). You can also explore the entire FAT independently of the directory structure, to see how the table is laid out and how it relates to the files and directories.
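The relationship between a file and its FAT entries can be sketched in a few lines of Python. This is a minimal illustration for FAT32 only, assuming the FAT has already been read into memory as raw bytes; the function name `fat32_chain` is hypothetical and this is not FileSystemAnalyzer's own code. Each 32-bit entry holds the number of the next cluster in the file, and a value of 0x0FFFFFF8 or higher marks the end of the chain.

```python
import struct

FAT32_BAD = 0x0FFFFFF7   # bad-cluster marker; anything above it is end-of-chain

def fat32_chain(fat: bytes, start_cluster: int) -> list[int]:
    """Follow a FAT32 cluster chain, returning the clusters in file order."""
    chain = []
    seen = set()
    cluster = start_cluster
    while 2 <= cluster < FAT32_BAD:          # clusters 0 and 1 are reserved
        if cluster in seen:
            raise ValueError("loop detected in cluster chain")
        offset = cluster * 4                 # each FAT32 entry is 4 bytes
        if offset + 4 > len(fat):
            raise ValueError("cluster number lies beyond the end of the FAT")
        seen.add(cluster)
        chain.append(cluster)
        (entry,) = struct.unpack_from("<I", fat, offset)
        cluster = entry & 0x0FFFFFFF         # the top 4 bits are reserved
    return chain

# Synthetic FAT with 8 entries: a file occupying clusters 2 -> 3 -> 5.
entries = [0x0FFFFFF8, 0x0FFFFFFF, 3, 5, 0, 0x0FFFFFFF, 0, 0]
fat = struct.pack("<8I", *entries)
print(fat32_chain(fat, 2))  # [2, 3, 5]
```

The starting cluster comes from the file's directory entry, which is why the directory structure and the table are best viewed side by side.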

exFAT

As with FAT, FileSystemAnalyzer lets you explore the exFAT file system, look at the actual FAT, and see how each file corresponds to its FAT entries.

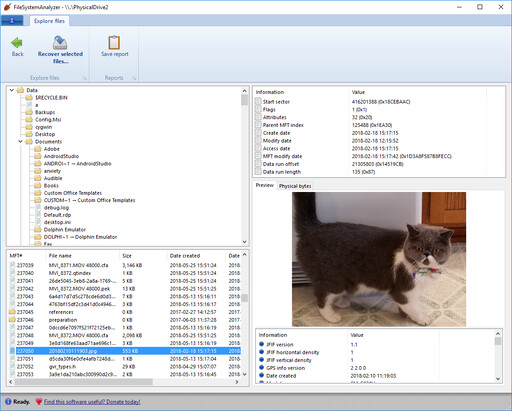

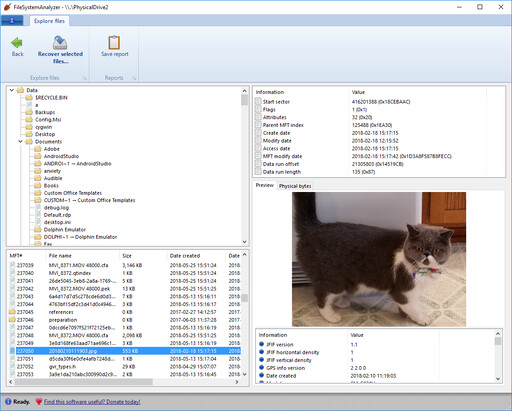

NTFS

In addition to exploring the NTFS file and folder structure, you can also see the MFT (Master File Table), and which MFT entry corresponds to which file or folder:

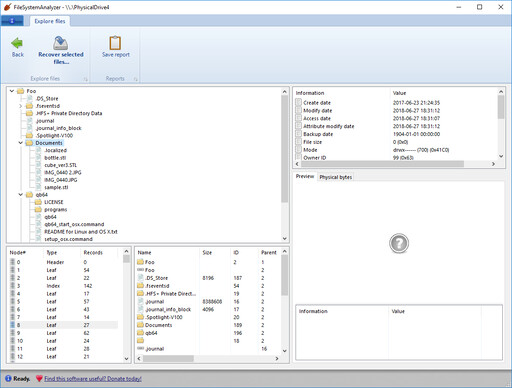

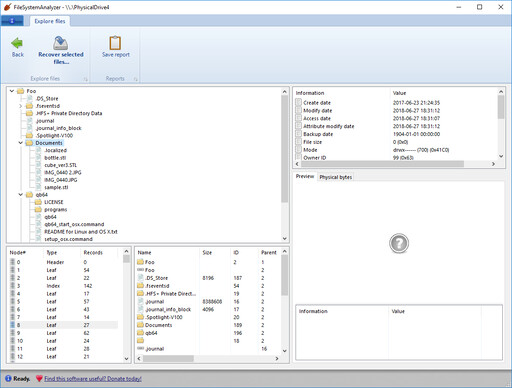

HFS and HFS+

HFS+ is the default file system used in macOS (although it is slowly being superseded by APFS), and is fully supported in FileSystemAnalyzer. Of course older versions of HFS are also supported. You can explore the folders and files in an HFS or HFS+ partition, and you can also see the actual B-Tree nodes and node contents that correspond to each file:

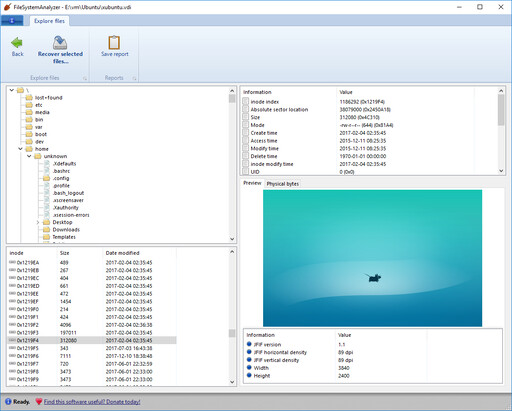

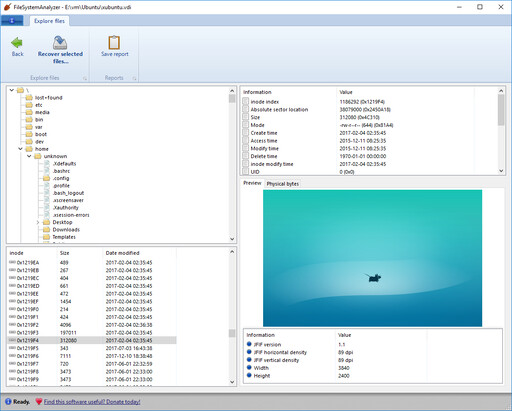

ext4

Ext4 partitions (used in Linux and other *nix operating systems) are also supported in FileSystemAnalyzer. In addition to exploring the folders and files, you can also see the actual inode table, and observe how the inodes correspond to the directory structure:

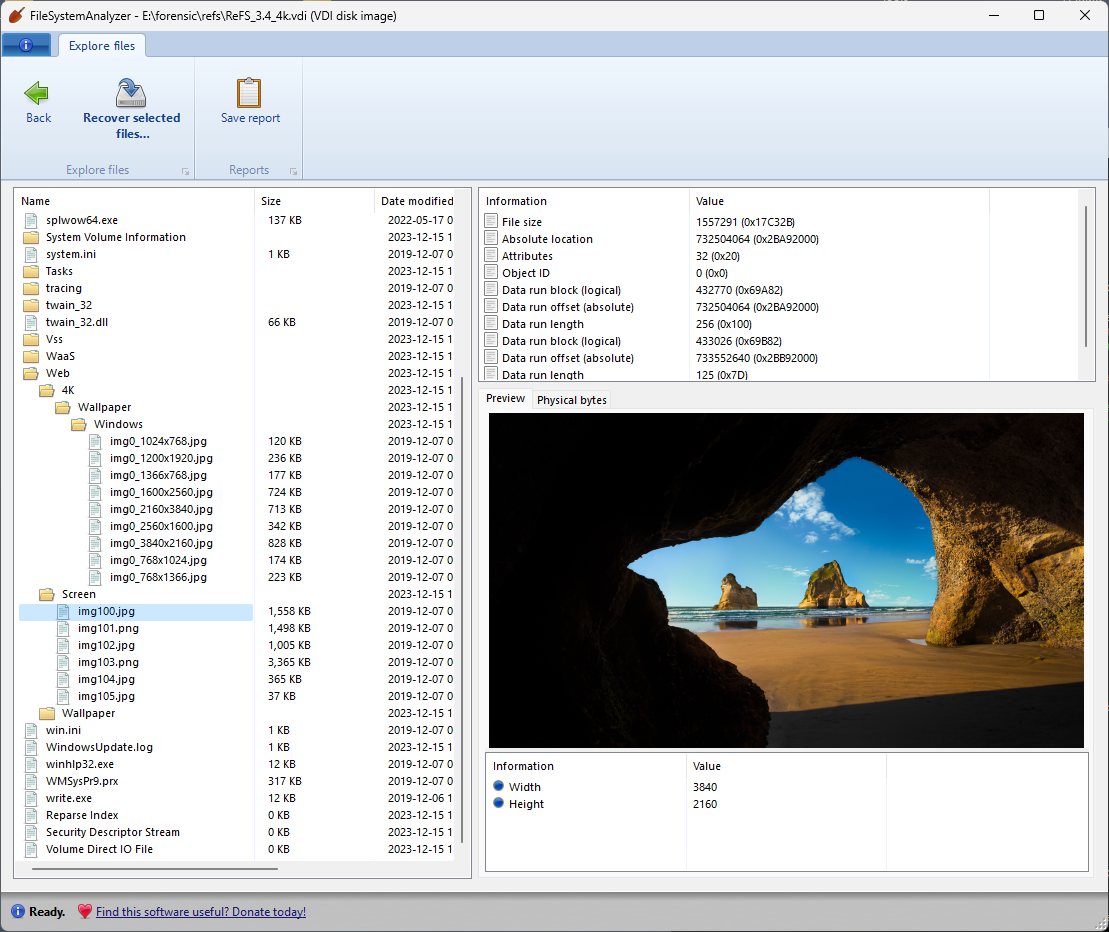

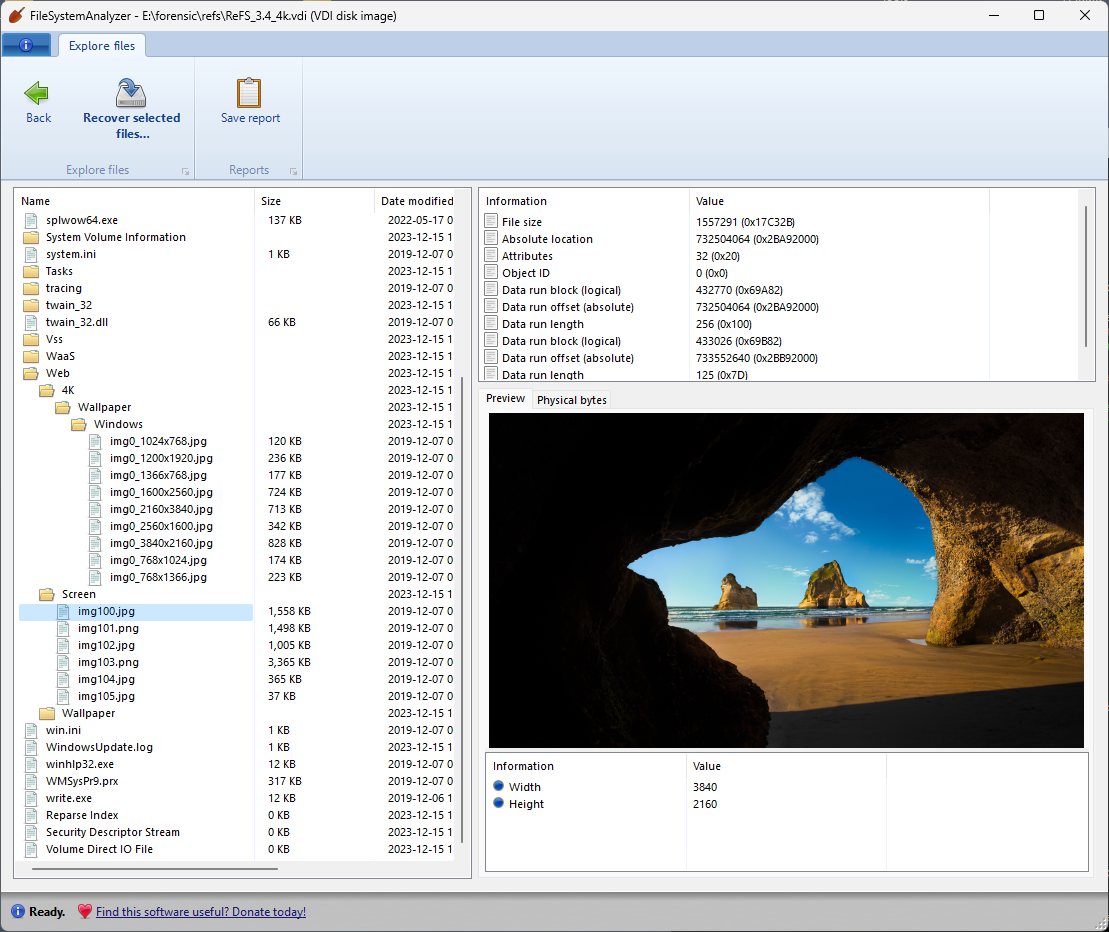
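The way an inode number maps to a position in the inode table follows a simple formula, sketched below in Python. Inode numbers start at 1, each block group holds a fixed number of inodes, and each group's table starts at a block recorded in its group descriptor. The function name `locate_inode` and the sample geometry are made up for illustration; real values come from the superblock and the group descriptors.

```python
from typing import NamedTuple, Sequence

class InodeLocation(NamedTuple):
    group: int         # block group that holds the inode
    index: int         # position inside that group's inode table
    byte_offset: int   # offset of the inode from the start of the volume

def locate_inode(ino: int, inodes_per_group: int, inode_size: int,
                 block_size: int, inode_table_blocks: Sequence[int]) -> InodeLocation:
    """Locate an inode given the volume geometry and each group's table start block."""
    if ino < 1:
        raise ValueError("inode numbers start at 1")
    group, index = divmod(ino - 1, inodes_per_group)
    table_start = inode_table_blocks[group] * block_size
    return InodeLocation(group, index, table_start + index * inode_size)

# Hypothetical geometry: 8192 inodes per group, 256-byte inodes, 4 KiB blocks,
# and inode tables starting at blocks 1059 and 33827 for groups 0 and 1.
print(locate_inode(2, 8192, 256, 4096, [1059, 33827]))     # root directory: group 0, index 1
print(locate_inode(8193, 8192, 256, 4096, [1059, 33827]))  # first inode of group 1
```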

ReFS

This tool supports browsing ReFS (Resilient File System) volumes (formatted with ReFS versions 3.0 and above), even on versions of Windows that don’t support ReFS on their own.

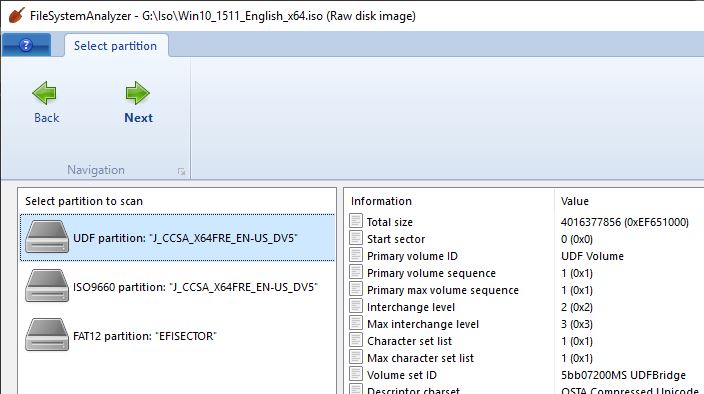

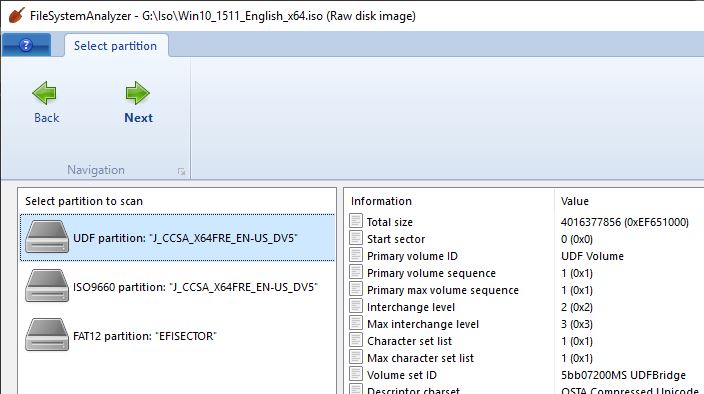

ISO 9660 / Joliet

ISO 9660 is the original and simplest file system on CD and DVD discs, and Joliet is Microsoft’s extension to ISO 9660 that adds support for Unicode file names. FileSystemAnalyzer allows you to browse a Joliet file system as either Joliet or ISO 9660 by letting you select which volume descriptor to use.
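The choice between volume descriptors can be illustrated with a short Python sketch. Volume descriptors start at sector 16 (2048-byte sectors), each carries the identifier "CD001", and a supplementary descriptor is treated as Joliet when its escape-sequence field holds one of three UCS-2 markers. The helper names `list_volume_descriptors` and `make_vd` are hypothetical, and the image here is synthetic; this is a sketch of the on-disc layout, not FileSystemAnalyzer's code.

```python
import io
from typing import BinaryIO

SECTOR = 2048
JOLIET_ESCAPES = (b"%/@", b"%/C", b"%/E")   # UCS-2 levels 1 to 3

def list_volume_descriptors(image: BinaryIO) -> list[tuple[int, str]]:
    """Return (sector, kind) for each volume descriptor before the terminator."""
    found = []
    sector = 16                               # descriptors begin at sector 16
    while True:
        image.seek(sector * SECTOR)
        vd = image.read(SECTOR)
        if len(vd) < SECTOR or vd[1:6] != b"CD001":
            break                             # truncated image or not ISO 9660
        vd_type = vd[0]
        if vd_type == 255:                    # volume descriptor set terminator
            break
        if vd_type == 1:
            found.append((sector, "ISO 9660 primary"))
        elif vd_type == 2:
            is_joliet = vd[88:91] in JOLIET_ESCAPES
            found.append((sector, "Joliet" if is_joliet else "supplementary"))
        else:
            found.append((sector, f"type {vd_type}"))
        sector += 1
    return found

def make_vd(vd_type: int, escape: bytes = b"") -> bytes:
    """Build a blank synthetic descriptor for the demo below."""
    vd = bytearray(SECTOR)
    vd[0] = vd_type
    vd[1:6] = b"CD001"
    vd[6] = 1
    vd[88:88 + len(escape)] = escape
    return bytes(vd)

demo = io.BytesIO(bytes(16 * SECTOR) + make_vd(1) + make_vd(2, b"%/@") + make_vd(255))
print(list_volume_descriptors(demo))  # [(16, 'ISO 9660 primary'), (17, 'Joliet')]
```

Both descriptors point at their own directory tree over the same file data, which is why the same disc can be browsed either way.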

UDF

UDF, the modern file system generally used on DVD and Blu-ray discs, is also fully supported. When opening a disc or disk image, you can choose to open the UDF file system or the stub ISO 9660 volume that usually accompanies it.

UDF can also be used on regular disks, not just optical discs. (Pro tip: it’s actually possible to format any disk as UDF by running this command in an elevated command prompt: format <drive>: /fs:UDF)

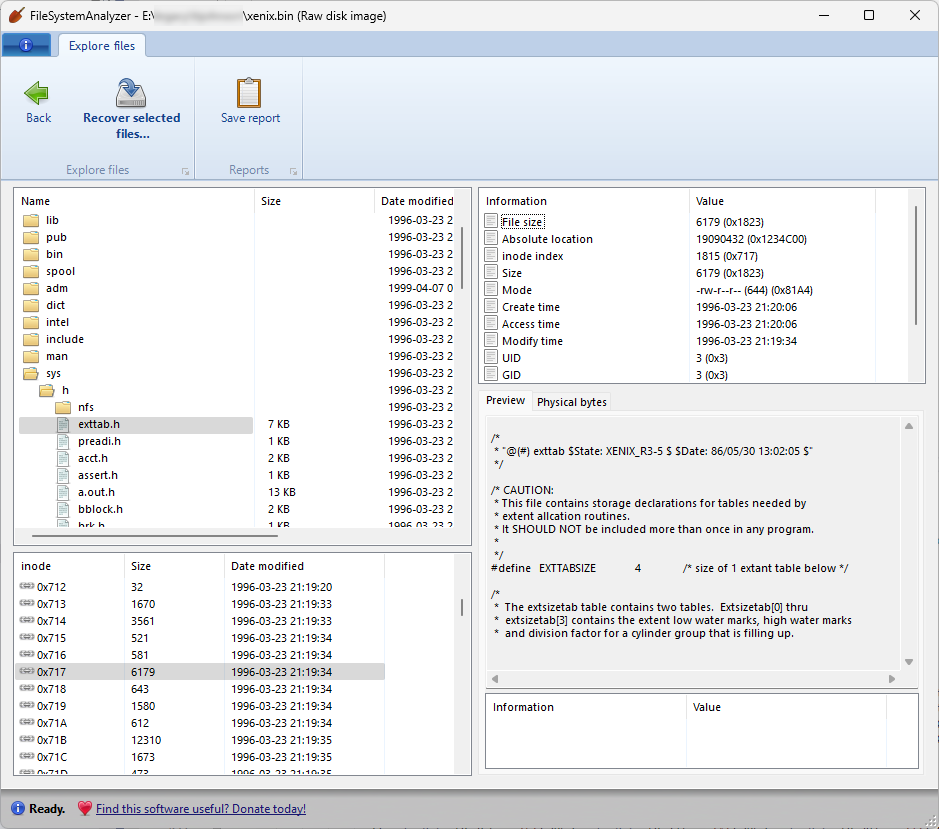

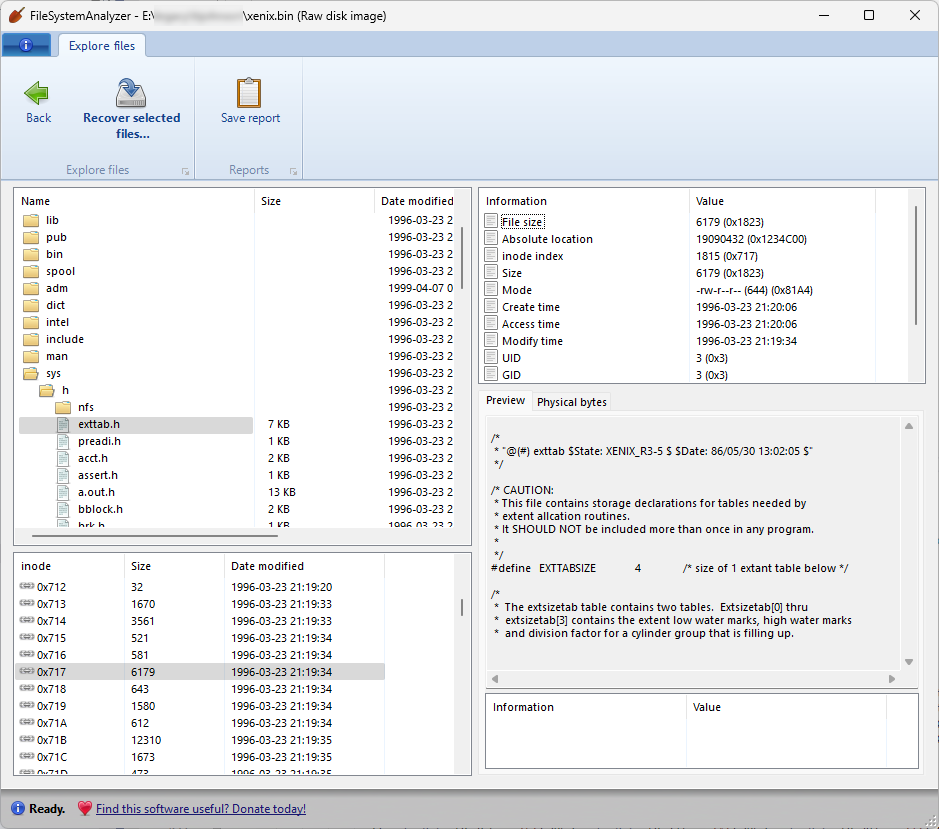

UFS, Xenix, Ultrix, Minix

FileSystemAnalyzer can read a variety of inode-based file systems used in Unix and similar operating systems, including the standard UFS and UFS2 as well as the more ancient Xenix and Ultrix file systems, and lets you parse their contents and traverse the inode list. Regarding Xenix and Ultrix, note that support for these systems is based only on disk images I’ve seen in the wild. Since these file systems tended to change significantly from one version to another, or from one architecture to another, it is possible that your specific version might not be supported. If you have an old disk image that FileSystemAnalyzer can’t read, let me know.

APFS

Support for APFS is still very rudimentary, since there isn’t yet any official documentation on its internal structure, so supporting it requires some reverse engineering. Nevertheless, fuller support for APFS is planned for a near-future update.

Disk images

The program supports E01 (EWF) disk images, as well as VHD (Microsoft Virtual Hard Disk), VDI (from VirtualBox), VMDK (from VMware), ISO files (CD/DVD images) and of course plain dd images.

Creating reports

Given the exhaustiveness of the information that this tool presents about the file system that it’s reading, there’s no end to the types of reports that it could generate. Before committing to specific type(s) of reports for the program to create, I’d like to get some feedback from other forensics specialists on what kind of information would be the most useful. If you have any suggestions on what to include in reports, please contact me.

Limitations

For now, FileSystemAnalyzer is strictly a read-only tool: it lets you read files and folders from a multitude of partitions, but does not let you write new data to them. In some ways this can actually be beneficial (especially for forensics purposes), but it is clearly a limitation for users who might want to use the tool to add or modify files in partitions that are not supported natively by the operating system.

Feedback

I’d love to hear what you think of FileSystemAnalyzer so far, and any ideas that you might have for new features or improvements. If you have any suggestions, feel free to contact me!

Download FileSystemAnalyzer