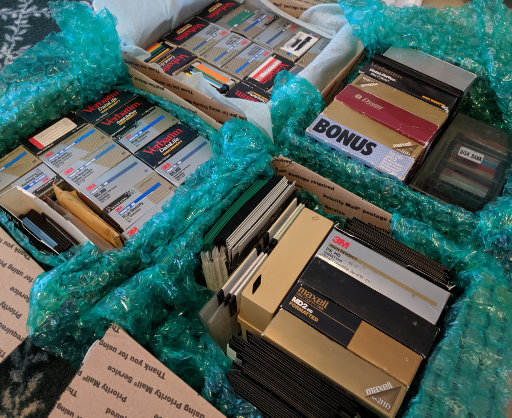

I’ve got another recent data recovery job that is worth mentioning! (Quick reminder: I offer a service to read data from super-old media such as tapes and floppies.) This particular client had a number of QIC-80 tapes from the mid-1990s that contained backups of a desktop workstation.

To read QIC-80 tapes, I have an ancient Colorado 250MB drive which I acquired a while ago, but here’s the problem: this drive connects to the floppy disk controller on the motherboard, which means that it needs very specific drivers or software to communicate with it.

In Linux, the way to communicate with such drives is the ftape driver, which used to be included with the Linux kernel. However, ftape was removed from the kernel in 2006, the stated reasons being too many bugs and too few users.

Since the tape’s label indicated that it came from a PC system, I decided to try to keep it simple and recreate an actual DOS PC with this drive attached to the floppy controller. All I needed was to find software that could communicate with this drive properly. After some serious dredging of old internet forums, I found a download site that contains the oldest versions of the Colorado backup software.

Setting up the boot disk

As I’ve done in the past, I used bochs to create a generously-sized disk image of 1 GB, and installed MS-DOS 6.22 onto it within the emulated environment of bochs. Then I copied over the installation files for the Colorado backup software. (I planned to do the actual installing of the Colorado software on the live PC instead of the emulated PC, since it might do some of the hardware detection during setup.)
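For anyone who would rather script the image creation than click through a tool, here is a minimal Python sketch of what a flat (raw) disk image amounts to: a zero-filled file whose size is a whole number of cylinders. It is not what I actually ran. The geometry of 16 heads and 63 sectors per track is an assumption (a common choice for emulated IDE disks), and the function names and the `dos622.img` filename are made up for illustration.

```python
import os

SECTOR_SIZE = 512          # bytes per sector
HEADS = 16                 # assumed geometry, not taken from the original setup
SECTORS_PER_TRACK = 63     # assumed geometry, not taken from the original setup


def flat_image_geometry(size_mb):
    """Return (cylinders, heads, sectors_per_track) for a requested size in MB.

    The cylinder count is rounded down so the image is a whole number of cylinders.
    """
    bytes_per_cylinder = HEADS * SECTORS_PER_TRACK * SECTOR_SIZE
    cylinders = (size_mb * 1024 * 1024) // bytes_per_cylinder
    return cylinders, HEADS, SECTORS_PER_TRACK


def create_flat_image(path, size_mb):
    """Create a zero-filled flat image at `path` and return its size in bytes."""
    cylinders, heads, spt = flat_image_geometry(size_mb)
    total_bytes = cylinders * heads * spt * SECTOR_SIZE
    with open(path, "wb") as f:
        # Extending the file with truncate() fills it with zeros; on most
        # filesystems the result is sparse and takes little real space.
        f.truncate(total_bytes)
    return total_bytes


if __name__ == "__main__":
    # Hypothetical filename; 1024 MB matches the 1 GB image described above.
    size = create_flat_image("dos622.img", 1024)
    print(flat_image_geometry(1024), size)
```

The cylinder, head, and sector counts are the values an emulator typically wants to know when the image is attached as a hard disk, so it is handy to compute them in the same place as the file itself.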

Once the emulated disk image was complete, I wrote it onto a USB flash drive, plugged the drive into the old PC with the tape drive attached, and booted from the USB drive. (Thankfully the old PC has a very versatile motherboard that can boot from pretty much anything.)
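Writing the image to the flash drive is just a raw byte-for-byte copy onto the device. As a rough sketch of that step (an illustration, not the exact command I used), the Python below copies an image to a target in chunks and then reads it back to verify. The function names are hypothetical, and any device path you pass (something like `/dev/sdX` on Linux) is a placeholder: writing to the wrong device will destroy whatever is on it, and raw device access normally needs root.

```python
import os


def write_image(image_path, target_path, chunk_size=4 * 1024 * 1024):
    """Copy a raw disk image onto a target (block device or file).

    Returns the number of bytes written.
    """
    # "r+b" writes in place without truncating, which is what a block device
    # needs; fall back to "wb" if the target is a file that does not exist yet.
    mode = "r+b" if os.path.exists(target_path) else "wb"
    written = 0
    with open(image_path, "rb") as src, open(target_path, mode) as dst:
        while True:
            chunk = src.read(chunk_size)
            if not chunk:
                break
            dst.write(chunk)
            written += len(chunk)
        dst.flush()
        os.fsync(dst.fileno())  # make sure the data has actually reached the device
    return written


def verify_image(image_path, target_path, chunk_size=4 * 1024 * 1024):
    """Check that the start of the target matches the image byte for byte."""
    with open(image_path, "rb") as src, open(target_path, "rb") as dst:
        while True:
            expected = src.read(chunk_size)
            if not expected:
                return True
            if dst.read(len(expected)) != expected:
                return False
```

The verify pass only compares as many bytes as the image contains, since the flash drive will usually be larger than the image written to it.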

The old PC booted successfully into MS-DOS, and I proceeded to set up the Colorado backup software. This did not present any issues, and the setup completed successfully without any non-default configuration.

Restoring

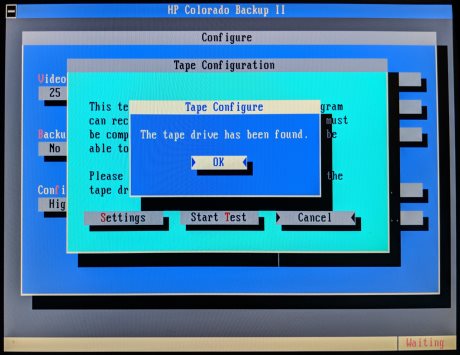

When I ran the backup software, it went through a first-time setup process where the first sign of hope appeared: the software said that the tape drive was detected!

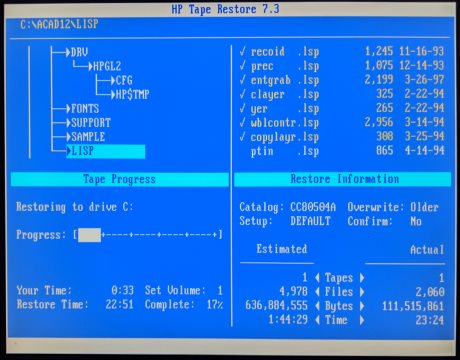

The next step was to rebuild the backup catalog from the tape, while praying that the catalog was compatible with this version of the backup software. And what do you know, the catalog rebuilt successfully, and I could see the directory tree of the backup. The final step was to perform the actual restoring of the files, which I did directly onto the current boot disk.

There was not a single hiccup during the actual reading of the tape. I continue to be amazed by the resiliency of tape backup media, as well as the durability of the drive hardware, which still works flawlessly after 25 years.

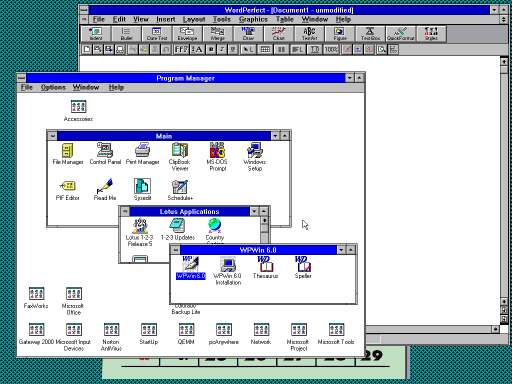

This tape was a full backup of a PC workstation from 1996, so the final “bonus” step was to boot into the backup within an emulated environment, and see this workstation running in all its glory: