Recently I had the opportunity to play around with Project Tango from Google:

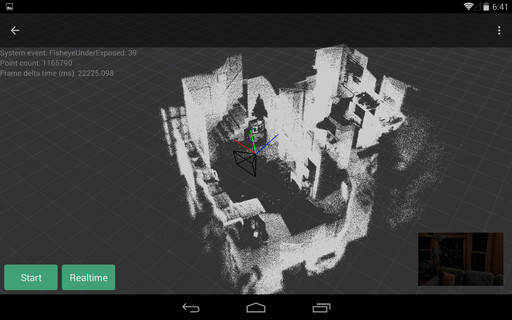

I whipped up a quick demo based on Google's existing sample code. It takes the real-time point cloud provided by the depth sensor, “records” it into a large buffer of points, and displays the whole buffer in real time, so you can move around a 3D area and record its geometry as you go. It can also save the accumulated point cloud to a file for loading into an application such as Blender.
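To make the "record into a big buffer, then save" idea concrete, here is a minimal sketch in Python. It is not the demo's actual code (that was built on Google's Tango sample code), and everything in it is an assumption for illustration: the `PointCloudRecorder` class, its method names, the pose being passed in as a 3x3 rotation matrix plus a translation vector, and ASCII PLY as the output format (chosen only because Blender can import it).

```python
class PointCloudRecorder:
    """Hypothetical recorder: accumulates depth frames into one capped buffer."""

    def __init__(self, max_points=1_000_000):
        self.max_points = max_points  # hard cap so the buffer cannot grow forever
        self.points = []              # (x, y, z) tuples in world coordinates

    def add_frame(self, frame_points, rotation, translation):
        """Move one frame of sensor-space points into world space and store them.

        rotation is a 3x3 row-major matrix and translation a 3-vector; together
        they stand in for the device pose at the moment the frame was captured.
        Returns how many points were actually stored.
        """
        stored = 0
        for x, y, z in frame_points:
            if len(self.points) >= self.max_points:
                break  # buffer is full, drop the rest of this frame
            world = tuple(
                rotation[row][0] * x + rotation[row][1] * y
                + rotation[row][2] * z + translation[row]
                for row in range(3)
            )
            self.points.append(world)
            stored += 1
        return stored

    def to_ply(self):
        """Serialize the buffer as an ASCII PLY document (vertices only)."""
        lines = [
            "ply",
            "format ascii 1.0",
            f"element vertex {len(self.points)}",
            "property float x",
            "property float y",
            "property float z",
            "end_header",
        ]
        lines += [f"{x:.6f} {y:.6f} {z:.6f}" for x, y, z in self.points]
        return "\n".join(lines) + "\n"

    def save(self, path):
        """Write the PLY text to disk."""
        with open(path, "w", encoding="ascii") as handle:
            handle.write(self.to_ply())
```

Each depth frame would go through `add_frame` along with the pose reported for that frame, and `save("room.ply")` (the filename is just an example) would write out whatever has been collected so far. Capping the buffer is the simplest way to keep memory bounded on a mobile device; a real app might prefer to thin out nearby points instead of dropping new ones.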

Here are some screenshots of the demo, after I used it to record the inside of my living room: